Facebook suspends Cambridge Analytica

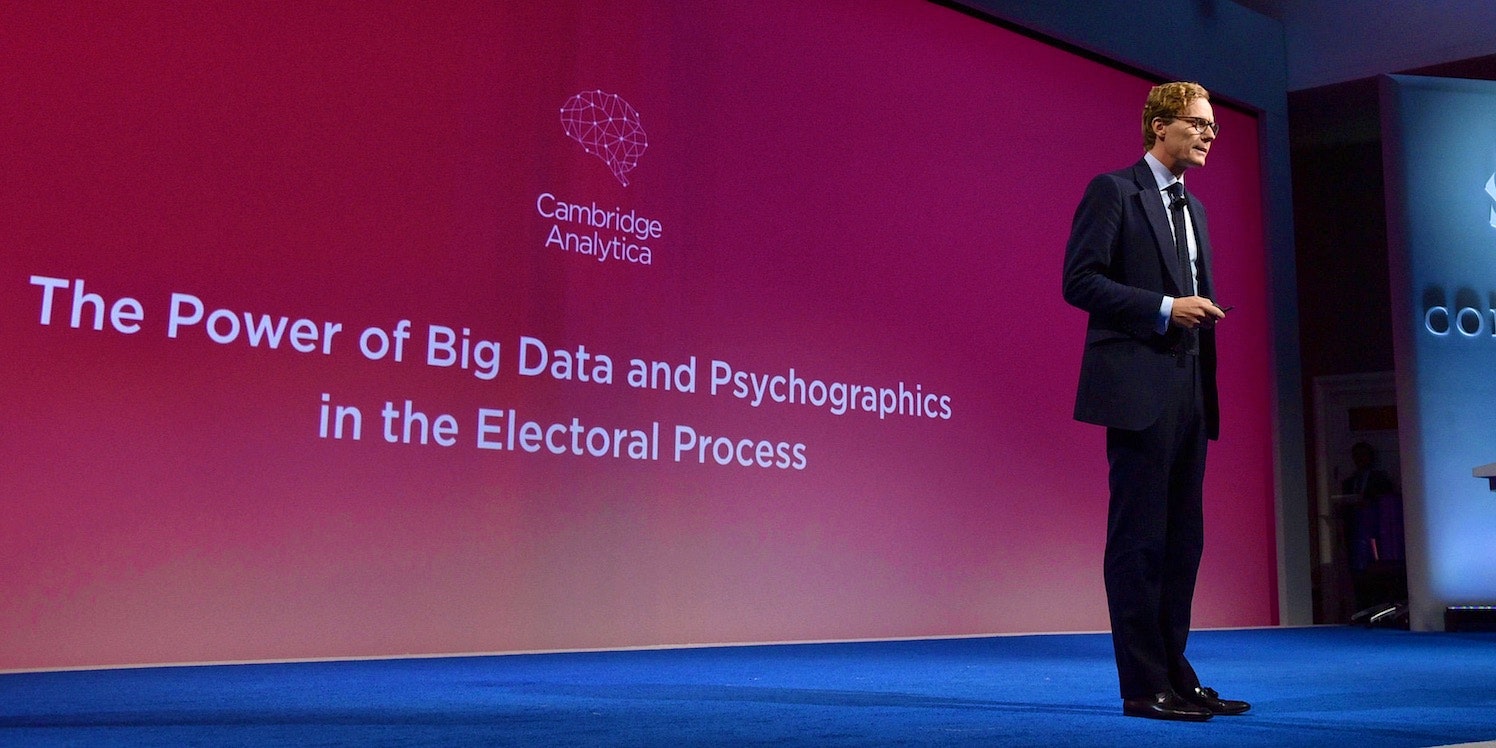

A year ago, in "The man least likely to succeed in politics", I wrote about how big data collected from social media sites, combined with machine learning, was used to influence voting in the United States. Collecting big data, and more specifically social data about each of us, has been described as the "new gold rush". Yet many of us are unaware of how technology is enabling the use (and misuse) of our personal data. As a technologist, I find this area fascinating, and it is evolving rapidly.

In that article I discussed how the Trump campaign used a firm named Cambridge Analytica to identify potential voters and to influence them. Research had shown that by harvesting and studying a person's Facebook profile and "likes", researchers can come to know that person better than their spouse does.

Now Facebook has announced that it has suspended Cambridge Analytica over concerns that it and other parties improperly obtained and stored users' personal information. Facebook admits it knew about this issue in 2015, prior to the election.

In 2015, we learned that a psychology professor at the University of Cambridge named Dr. Aleksandr Kogan lied to us and violated our Platform Policies by passing data from an app that was using Facebook Login to SCL/Cambridge Analytica, a firm that does political, government and military work around the globe.

— Facebook, "Suspending Cambridge Analytica and SCL Group from Facebook", March 16, 2018

Cambridge Analytica has gained attention for its work with the Trump presidential campaign as well as a campaign supporting Britain's exit from the European Union. Cambridge Analytica has been scrutinized for ties with Julian Assange/WikiLeaks and the Mercer family. Rebekah Mercer and her father, hedge-fund billionaire Robert Mercer, are part owners of Cambridge Analytica. The Mercer family is among the top Trump donors, and helps fund the conservative website Breitbart.

During 2015, Breitbart went from a medium-sized site with a small Facebook page of 100,000 likes to a force shaping the election with almost 1.5 million likes. By July, Breitbart had surpassed The New York Times’ main account in interactions. By December, it was doing 10 million interactions per month, about 50 percent of what Fox News, which had 11.5 million likes on its main page, was doing.

Steve Bannon introduced Cambridge Analytica (where he was allegedly chairman of the board) to the Trump campaign in mid-May 2016. During the campaign, Cambridge Analytica provided data, polling and research services. Mr. Bannon became the campaign’s chief executive officer in August 2016 and later joined the White House as a top strategist. (He left the administration in August 2017.)

Back in 2015, prior to the election, we knew:

- That a multi-billion-person platform competitively dominated media distribution.

- That fake news and misinformation were rampaging across the internet generally and Facebook specifically.

- That Russian disruptive information operations were under way.

All of these things were known by various parties, and yet no one could quite put it all together: Facebook data was being harvested and "weaponized" to target voters, and then Facebook's (and Twitter's) platforms were being used to disseminate false and misleading information. Facebook profited by taking a very big chunk of the estimated $1.4 billion worth of digital advertising purchased during the election.

After the election, Jonathan Albright, research director of the Tow Center for Digital Journalism at Columbia University, pulled data on six publicly known Russia-linked Facebook pages. He found that their posts had been shared 340 million times. And those were just six of the 470 pages that Facebook has linked to Russian operatives.

Why is Facebook acting now?

In December, Special Counsel Robert Mueller requested emails from Cambridge Analytica in connection with his investigation into Russian interference in the 2016 U.S. election, the Wall Street Journal reported. The Journal also reported that the emails had earlier been turned over to the House Intelligence Committee.

Rep. Adam Schiff (D., Calif.), the top Democrat on the House Intelligence Committee, told the Journal that ties between Cambridge Analytica and WikiLeaks were of “deep interest” to the committee.

Christopher Wylie, a former Cambridge Analytica employee, has recently come forward as a whistle-blower. He described the effort to influence the election in stark terms:

“We exploited Facebook to harvest millions of people’s profiles. And built models to exploit what we knew about them and target their inner demons. That was the basis the entire company was built on.”

— Christopher Wylie, former Cambridge Analytica employee

This raises new questions about Facebook’s role in targeting voters in the US presidential election. It comes only weeks after Special Counsel Robert Mueller's indictment of 13 Russians, which stated that they had used the platform to perpetrate “information warfare” against the US.

It is possible that either the House Intelligence Committee or Robert Mueller's investigation has determined conclusively that Cambridge Analytica used Facebook data to influence voters, forcing Facebook's hand to finally admit what it knew and suspend Cambridge Analytica from its platform.

How do we control this?

The real point is that this is how Facebook is designed to work. Facebook is designed to help advertisers with profiling and micro-targeting. All Cambridge Analytica did was understand how Facebook works and use it. Unless Facebook changes its business model, it will continue to be used as intended, including in ways that influence politics.

What is more, it is only going to get more effective. Facebook is gathering more data all the time, including through its associated operations in Instagram, WhatsApp and more. Its analyses are steadily being refined, and more firms like Cambridge Analytica are becoming aware of the possibilities and how they might be used.

I believe this technology has outpaced our legal system, and proper and appropriate controls are being left to the platforms themselves, which clearly have an economic incentive to look the other way. It is time to shine a light on these practices and to educate and protect our citizens. On March 18, Senator Amy Klobuchar (D-Minnesota) tweeted that Facebook CEO Mark Zuckerberg should testify in front of the Senate Judiciary Committee, adding, "It’s clear these platforms can’t police themselves."

It is past time to create a legal framework that governs how our personal data is collected and used. A good framework would, at a minimum, address these points:

- First, data collection must be disclosed in clear and plain language.

- Second, the use of the data must be disclosed. If our data is being used for marketing purposes, or political influence, those uses must be disclosed.

- Third, it must be as easy to opt out as it is to opt in, at any time.

- Finally, users must be immediately notified of any inappropriate collection, retention, or use of their data by third parties.

Europe is far ahead of the United States. After four years of preparation and debate, the EU enacted the General Data Protection Regulation, known as GDPR. It is the most important change in data privacy regulations in the 21st century, and it takes effect very soon, on May 25, 2018. GDPR is wide-ranging in scope and includes the concept of "Privacy by Design".

At its core, privacy by design calls for data protection to be built in from the outset of system design, rather than added on later. Consent has also been strengthened. Consent must be clear and distinguishable from other matters and provided in an intelligible and easily accessible form, using clear and plain language. It must be as easy to withdraw consent as it is to give it.

It's clear the US needs a similar regulatory structure, as our current one is lacking in so many ways.

UPDATE 03/19/2018: According to the report "Facebook Executive Planning to Leave Company Amid Disinformation Backlash", Alex Stamos, Facebook’s chief information security officer, plans to leave Facebook by August. He had advocated more disclosure around Russian interference on the platform and some restructuring to better address the issues, but was met with resistance by colleagues, according to current and former employees. In December, Mr. Stamos’ day-to-day responsibilities were reassigned to others.

References

- Politico: Facebook suspends Trump-affiliated data firm over privacy concerns

- Facebook: Suspending Cambridge Analytica and SCL Group from Facebook

- WSJ: Mueller Sought Emails of Trump Campaign Data Firm

- 50 million Facebook profiles harvested for Cambridge Analytica in major data breach

- How Trump Consultants Exploited the Facebook Data of Millions

- Facebook Executive Planning to Leave Company Amid Disinformation Backlash

- The Cambridge Analytica scandal isn’t a scandal: this is how Facebook works

- Cambridge Analytica filmed suggesting bribes and sex workers to entrap politicians

- The EU General Data Protection Regulation (GDPR)

- Making sense of the Facebook and Cambridge Analytica nightmare